Yuying Ge 葛玉莹 yyge13@gmail.com yyge13@gmail.com Google Sholar Google Sholar Github Github Shenzhen, China Shenzhen, China |

|

Biography

I am currently a Staff Research Scientist at XPENG Robotics, exploring cutting-edge technologies of embodied AI. Previously, I spent two wonderful years at

Tencent ARC Lab and Tencent AI Lab, working on multimodal foundation models.

In Aug 2023, I got my Ph.D. degree from the Department of Computer Science, The University of Hong Kong,

under the supervision of Prof. Ping Luo.

I was also a visiting student at UCSD, working with Prof. Xiaolong Wang.

We are actively looking for full-time researchers, engineers and interns to work on related topics. Please feel free to reach out if you are interested.

News

- [07/2025] We release ARC-Hunyuan-Video, a multimodal model for structured comprehension of real-world short videos.

- [06/2025] We release GRPO-CARE, introducing consistency-aware GRPO for multimodal reasoning.

- [06/2025] We release FlowAlign, using flow-based models to align learnable latent spaces to target distributions.

- [06/2025] We release AnimeShooter, multi-shot animation dataset for reference-guided video generation.

- [05/2025] We release Video-Holmes, evaluating MLLMs for complex video reasoning like Holmes.

- [04/2025] We release AnimeGamer, transforming characters from anime films into interactive entities with a MLLM.

- [04/2025] We release SEED-Bench-R1, exploring the effects of RL on video understanding with hierarchical evaluation.

- [03/2025] We release GenHancer, using imperfect generative models for enhanced vision-centric representations.

- [12/2024] We release Divot, a diffusion-powered video tokenizer for unified comprehension and generation.

- [12/2024] We release DiCoDe for autoregressive video generation with LLMs.

- [12/2024] We release MoTo, Latent Motion Tokens for autoregressive video pretraining to enhance robot manipulation.

- [12/2024] We release EgoPlan-Bench2, evaluating planning capabilities of MLLMs across various real-world scenarios.

- [07/2024] We release SEED-Story for Multimodal Long Story Generation based on SEED-X.

- [04/2024] We release SEED-X, the latest in our SEED series, which unifies multi-granularity comprehension and generation.

- [02/2024] SEED-Bench is accepted by CVPR 2024.

- [01/2024] SEED-LLaMA is accepted by ICLR 2024.

- [12/2023] We release EgoPlan-Bench, which evaluates egocentric embodied planning of MLLMs.

- [11/2023] We release SEED-Bench-2, evaluating the hierarchical capabilities of MLLMs.

- [10/2023] We release an online gradio demo of SEED-LLaMA.

- [10/2023] We release the technical report of SEED-LLaMA, which is empowered by the improved SEED-2 tokenizer.

- [08/2023] We release SEED-Bench, the most comprehensive MLLM benchmark to date.

- [07/2023] We release our SEED. Stay tuned for more updates.

- [02/2023] Three papers were accepted by CVPR 2023.

- [07/2022] One paper was accepted by ECCV 2022.

- [03/2022] One paper was accepted by CVPR 2022 as oral.

- [11/2021] One paper was accepted by IEEE TIP.

- [03/2021] Two papers were accepted by CVPR 2021.

- [03/2019] One paper was accepted by CVPR 2019.

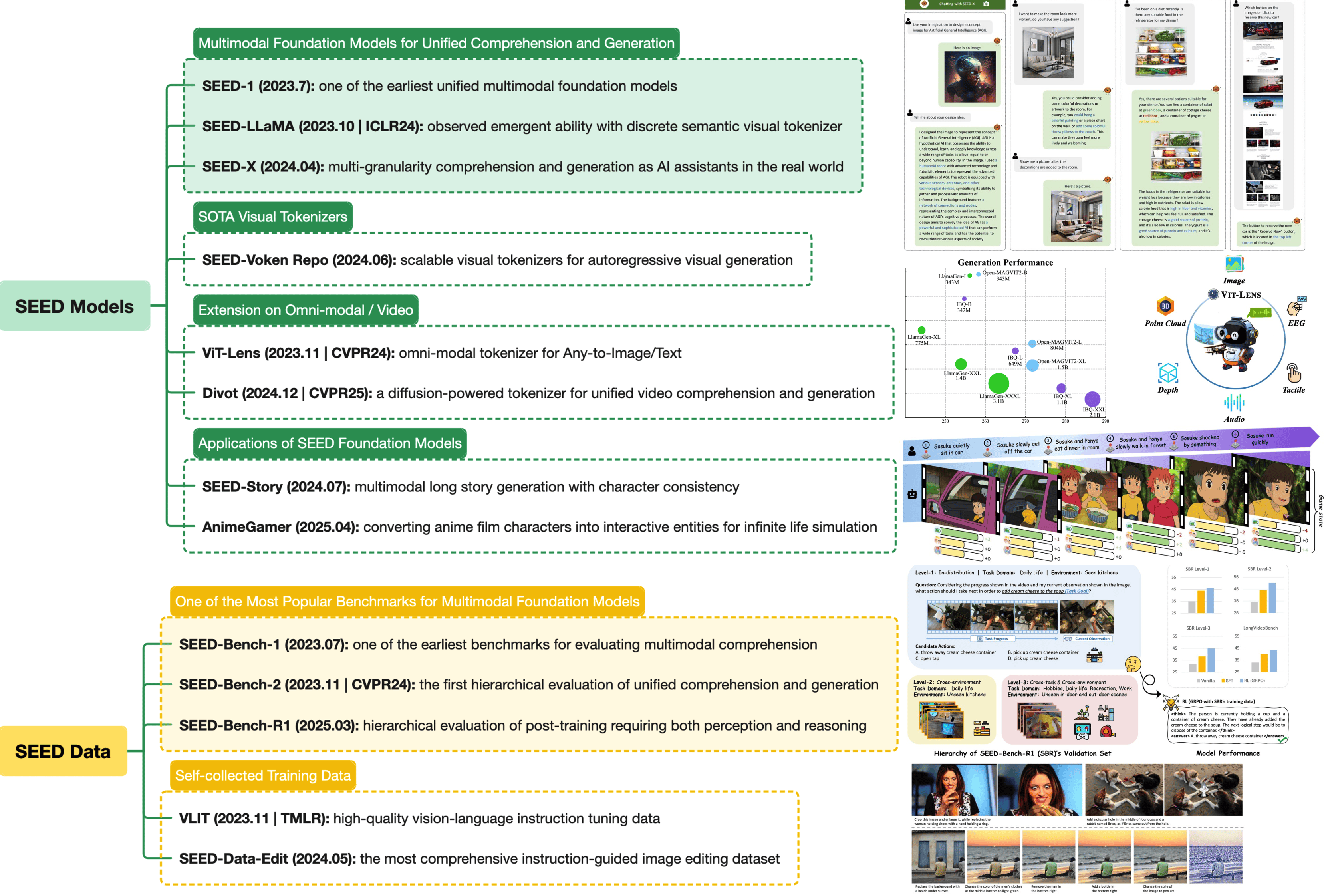

SEED Series

Since July 2023, our SEED Series has been pioneering the exploration of Multimodal Foundation Models for Unified Comprehension and Generation,

along with developing cutting-edge Benchmarks. We continuously explore real-world applications of unified foundation models, expand into broader modalities,

and enhance advanced capabilities like multimodal interleaved generation, and reasoning. All models and data are open-sourced.

Selected Publications

( *equal contribution #corresponding author / project lead )-

ARC-Hunyuan-Video-7B: Structured Video Comprehension of Real-World Shorts

Yuying Ge, Yixiao Ge, Chen Li, Teng Wang, Junfu Pu, Yizhuo Li, Lu Qiu, Jin Ma, Lisheng Duan, Xinyu Zuo, Jinwen Luo, Weibo Gu, Zexuan Li, Xiaojing Zhang, Yangyu Tao, Han Hu, Di Wang, Ying Shan

arXiv preprint, 2025. [Paper] [Project] [Code] [Model]

-

GRPO-CARE: Consistency-Aware Reinforcement Learning for Multimodal Reasoning

Yi Chen, Yuying Ge#, Rui Wang, Yixiao Ge, Junhao Cheng, Ying Shan, Xihui Liu

arXiv preprint, 2025. [Paper] [Code] [Model]

-

Aligning Latent Spaces with Flow Priors

Yizhuo Li, Yuying Ge#, Yixiao Ge, Ying Shan, Ping Luo

arXiv preprint, 2025. [Paper] [Code] [Project]

-

AnimeShooter: A Multi-Shot Animation Dataset for Reference-Guided Video Generation

Lu Qiu, Yizhuo Li, Yuying Ge#, Yixiao Ge, Ying Shan, Xihui Liu

arXiv preprint, 2025. [Paper] [Code] [Project]

-

Video-Holmes: Can MLLM Think Like Holmes for Complex Video Reasoning?

Junhao Cheng, Yuying Ge#, Teng Wang, Yixiao Ge, Jing Liao, Ying Shan

arXiv preprint, 2025. [Paper] [Code] [Project]

-

AnimeGamer: Infinite Anime Life Simulation with Next Game State Prediction

Junhao Cheng, Yuying Ge#, Yixiao Ge, Jing Liao, Ying Shan

ICCV, 2025. [Paper] [Code] [Project]

-

Exploring the Effect of Reinforcement Learning on Video Understanding: Insights from SEED-Bench-R1

Yi Chen, Yuying Ge#, Rui Wang, Yixiao Ge, Lu Qiu, Ying Shan, Xihui Liu

arXiv preprint, 2025. [Paper] [Code] [Dataset]

-

GenHancer: Imperfect Generative Models are Secretly Strong Vision-Centric Enhancers

Shijie Ma, Yuying Ge#, Teng Wang, Yuxin Guo, Yixiao Ge, Ying Shan

ICCV, 2025. [Paper] [Code] [Project]

-

Divot: Diffusion Powers Video Tokenizer for Comprehension and Generation

Yuying Ge, Yizhuo Li, Yixiao Ge, Ying Shan

CVPR, 2025. [Paper] [Code]

-

DiCoDe: Diffusion-Compressed Deep Tokens for Autoregressive Video Generation with Language Models

Yizhuo Li, Yuying Ge#, Yixiao Ge, Ping Luo, Ying Shan

arXiv preprint, 2024. [Paper] [Project] [Code]

-

Moto: Latent Motion Token as the Bridging Language for Robot Manipulation

Yi Chen, Yuying Ge#, Yizhuo Li, Yixiao Ge, Mingyu Ding, Ying Shan, Xihui Liu

ICCV, 2025. [Paper] [Code] [Project]

-

EgoPlan-Bench2: A Benchmark for Multimodal Large Language Model Planning in Real-World Scenarios

Lu Qiu, Yuying Ge#, Yi Chen, Yixiao Ge, Ying Shan, Xihui Liu

arXiv preprint, 2024. [Paper] [Code] [Project]

-

SEED-Story: Multimodal Long Story Generation with Large Language Model

Shuai Yang, Yuying Ge#, Yang Li, Yukang Chen, Yixiao Ge, Ying Shan, Yingcong Chen

arXiv preprint, 2024. [Paper] [Code]

-

EgoPlan-Bench: Benchmarking Multimodal Large Language Models for Human-Level Planning

Yi Chen, Yuying Ge#, Yixiao Ge, Mingyu Ding, Bohao Li, Rui Wang, Ruifeng Xu, Ying Shan, Xihui Liu

arXiv preprint, 2024. [Paper] [Code] [Project]

-

SEED-X: Multimodal Models with Unified Multi-granularity Comprehension and Generation

Yuying Ge*, Sijie Zhao*, Jinguo Zhu*, Yixiao Ge, Kun Yi, Lin Song, Chen Li, Xiaohan Ding, Ying Shan

arXiv preprint, 2024. [Paper] [Code] [Demo]

-

SEED-Bench: Benchmarking Multimodal Large Language Models

Bohao Li*, Yuying Ge*, Yixiao Ge, Guangzhi Wang, Rui Wang, Ruimao Zhang, Ying Shan

CVPR, 2024. [Paper] [Code] [Data] [Leaderboard]

-

Making LLaMA SEE and Draw with SEED Tokenizer

Yuying Ge*, Sijie Zhao*, Ziyun Zeng, Yixiao Ge, Chen Li, Xintao Wang, Ying Shan

ICLR, 2024. [Paper] [Code]

-

Planting a SEED of Vision in Large Language Model

Yuying Ge*, Yixiao Ge*, Ziyun Zeng, Xintao Wang, Ying Shan

Technical Report, 2023. [Paper] [Code]

-

Policy Adaptation from Foundation Model Feedback

Yuying Ge, Annabella Macaluso, Li Erran Li, Ping Luo, Xiaolong Wang

CVPR, 2023. [Paper] [Project] [Code]

-

Learning Transferable Spatiotemporal Representations from Natural Script Knowledge

Ziyun Zeng*, Yuying Ge*, Xihui Liu, Bin Chen, Ping Luo, Shu-Tao Xia, Yixiao Ge

CVPR, 2023. [Paper] [Code]

-

MILES: Visual BERT Pre-training with Injected Language Semantics for Video-text Retrieval

Yuying Ge, Yixiao Ge, Xihui Liu, Alex Jinpeng Wang, Jianping Wu, Ying Shan, Xiaohu Qie and Ping Luo

ECCV, 2022. [Paper] [Code]

-

Bridging Video-text Retrieval with Multiple Choice Questions

Yuying Ge, Yixiao Ge, Xihui Liu, Dian Li, Ying Shan, Xiaohu Qie and Ping Luo

CVPR, 2022 (oral). [Paper] [Project] [Code]

-

Parser-Free Virtual Try-on via Distilling Appearance Flows

Yuying Ge, Yibing Song, Ruimao Zhang, Chongjian Ge, Wei Liu, and Ping Luo

CVPR, 2021. [Paper] [Code]

-

DeepFashion2: A Versatile Benchmark for Detection, Pose Estimation, Segmentation and Re-Identification of Clothing Images

Yuying Ge, Ruimao Zhang, Xiaogang Wang, Xiaoou Tang, and Ping Luo

CVPR, 2019. [Paper] [Data]

Education

Ph.D., Department of Computer Science, The University of Hong Kong, 2019 - 2023

Bachelor, University of Electronic Science and Technology of China (UESTC) (ranking 1/525), 2014 - 2018

Experiences

Staff Research Scientist at XPENG Robotics, 2025 - Present

Senior Researcher in Tencent ARC Lab, 2024 - 2025

Senior Researcher in Tencent AI Lab, 2023 - 2024

Intern in Tencent ARC Lab, 2021 - 2022

Intern in Tencent AI Lab, 2020 - 2021

Research Assistant in Multimedia Lab (MMLab), The Chinese University of Hong Kong, 2018 - 2019

Intern in SenseTime Research, 2017 - 2018

Academic Activities

Reviewer for CVPR, ICLR, ICML, NeurIPS, ECCV, ICCV, TPAMI, TNNLS, TMM, TVCJ

Organizer of DeepFashion2 Challenge Clothes Landmark Detection

and Clothes Retrieval in 2019, 2020

Organizer of Third Workshop on Computer Vision for Fashion, Art and Design in CVPR, 2020

Organizer of Second Workshop on Computer Vision for Fashion, Art and Design in ICCV, 2019

© Yuying Ge | Last updated: Dec. 2021